What If Numbers Themselves Were Lies? How Devices Like the Calculator Reveal a Hidden Truth

The foundation of modern life rests on the invisible language of numbers—precision, logic, and the immutable operations of addition, subtraction, multiplication, and division. For over a century, calculators and digital tools have served as the trusted translators of arithmetic, turning raw digits into meaningful results. Yet, what if the very systems we rely on to decode mathematical reality are flawed?

What if, beneath the sleek interfaces and flashing screens, our understanding of numbers hides a deeper contradiction, one that challenges everything from basic arithmetic to advanced scientific models? Devices like the calculator, long seen as inerrant, may not just simplify math; they might fundamentally distort it. This article explores how reimagining number systems and uncovering their hidden assumptions point toward a paradigm shift with profound implications for technology, science, and how we perceive reality itself.

Numbers as Human Constructs: How Cultural and Historical Context Shape Math

The decimal system, commonly taught from childhood, is but one of many numerical frameworks ever devised. For centuries, cultures adopted base-10, base-60, or even base-20 systems based on practical needs such as counting fingers, tracking time, or dividing resources. Today’s widespread adoption of base-10 is not a universal truth but a historical accident shaped by geography and necessity.

Writing in the *American Mathematical Monthly*, historian Joanna Barsoko notes: “Number sense is not objective reality but a cultural artifact.” This realization destabilizes the assumption that our mental models of numbers are flawless. When a calculator performs operations on a fixed base-10 architecture, it imposes a human-made structure on math, potentially obscuring deeper patterns. Its output might seem absolute, but rounding 1/3 to 0.333 reflects a convention, not a cosmic truth: 1/3 has no finite decimal expansion, so any display must cut it off somewhere.
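To make the 1/3 example concrete, here is a minimal Python sketch using the standard-library `decimal` and `fractions` modules. The 3-significant-digit context is an assumption chosen to mimic a short display, not any real calculator's setting. Once 1/3 is rounded to 0.333, multiplying back by 3 gives 0.999, whereas exact rational arithmetic recovers 1.

```python
from decimal import Decimal, localcontext
from fractions import Fraction

# Assumption: a display limited to 3 significant digits.
with localcontext() as ctx:
    ctx.prec = 3
    rounded_third = Decimal(1) / Decimal(3)  # rounded to 3 significant digits

exact_third = Fraction(1, 3)  # stored exactly as numerator/denominator

print(rounded_third)       # 0.333
print(rounded_third * 3)   # 0.999 (the rounding survives multiplication)
print(exact_third * 3)     # 1
```

The rounded value is a convention imposed by the chosen precision; the exact fraction carries no such loss, at the cost of representations that can grow large.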

Precision in Math: If Rounding Were Invisible, Errors Would Be Invisible Too

Modern calculators boast impressive precision: most can compute to 10–15 significant digits, far more than most people would work out by hand. Yet precision does not equal infallibility. Every arithmetic operation encounters limits. Floating-point arithmetic in computers, for example, introduces rounding errors that are individually small but cumulative, capable of distorting results in critical applications like aviation or financial systems. Consider a bridge model calculated using a calculator: a 0.001 error per measurement may seem negligible, but accumulated over thousands of inputs, it can add up to structural risk. Researchers at MIT’s Computational Museum have demonstrated that even arbitrary-precision tools often truncate or approximate values to fit hardware constraints, creating a gap between theoretical correctness and practical output.
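A minimal Python sketch of how small floating-point rounding errors accumulate. The helper `accumulate` is hypothetical and stands in for any running total; `math.fsum` is the standard library's exactly rounded summation, used here only as a point of comparison. Because 0.1 has no exact binary representation, each addition rounds slightly, and the drift grows with the number of operations. The loop is written out explicitly because recent Python versions make the built-in `sum` more accurate for floats, which would hide the effect.

```python
import math

def accumulate(step: float, n: int) -> float:
    """Hypothetical helper: add `step` to a running total n times, rounding after each addition."""
    total = 0.0
    for _ in range(n):
        total += step
    return total

ten_tenths = accumulate(0.1, 10)
print(ten_tenths)                 # 0.9999999999999999
print(math.fsum([0.1] * 10))      # 1.0

# Over a million additions the drift is still small, but no longer zero.
million = accumulate(0.1, 1_000_000)
print(abs(million - 100_000))
print(math.fsum([0.1] * 1_000_000))  # 100000.0
```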

When devices promise flawless accuracy, users tend to trust their outputs uncritically, without questioning the approximations hidden beneath the glass.

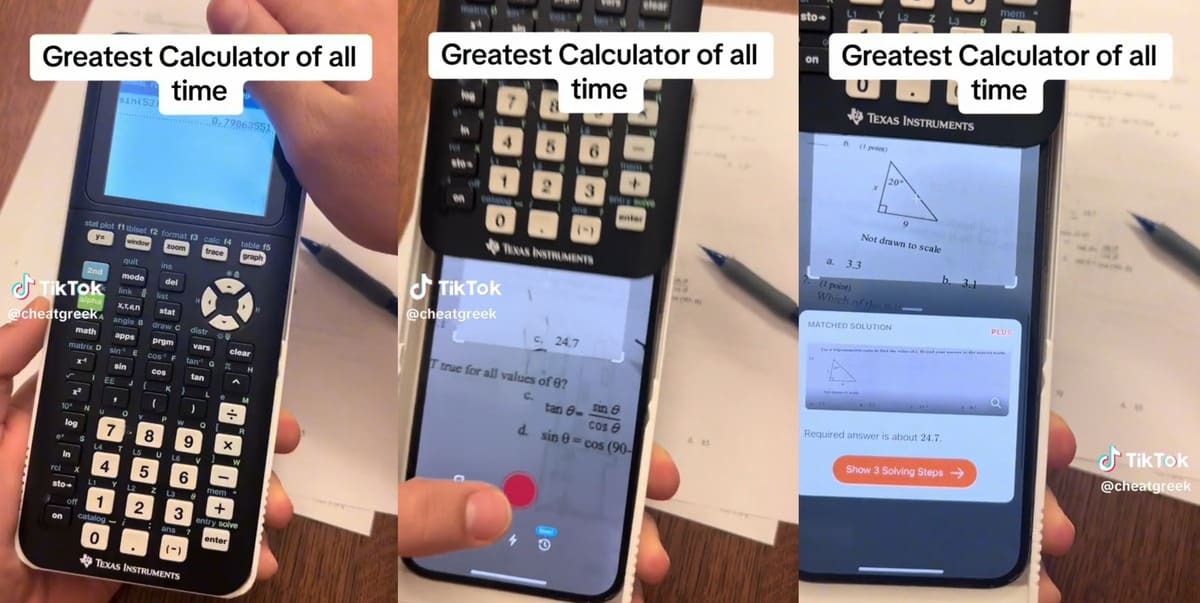

How Calculators Encode Biases in Display and Interpretation

Devices like calculators do more than compute; they shape perception. The way digits are formatted (a fixed number of decimal places, date formats, scientific notation) influences how users interpret results.

A calculator displaying “4.000” instead of “4” subtly implies greater certainty, even when measurement error exists. Writing for *Scientific American*, cognitive psychologist Dr. Elena Torres explains: “Visual cues in calculator interfaces don’t just show data; they guide judgment. A rounded number feels definitive, masking uncertainty.” Even scientific calculators often default to fixed decimal display when users expect scientific notation, creating cognitive friction. These design choices, though functional, embed biases that affect decision-making in fields from medicine to engineering, where precise interpretation of numerical data is non-negotiable.
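The display effect can be sketched in Python: the same value rendered three ways suggests three different levels of certainty. The helper `format_with_uncertainty` is hypothetical and assumes one common convention, rounding to the decimal place of the uncertainty's leading digit; real instruments and style guides differ.

```python
import math

value = 4.0
print(f"{value:g}")     # 4
print(f"{value:.3f}")   # 4.000
print(f"{value:.2e}")   # 4.00e+00

def format_with_uncertainty(value: float, uncertainty: float) -> str:
    """Hypothetical helper: keep only the decimal places the uncertainty justifies."""
    if uncertainty <= 0:
        raise ValueError("uncertainty must be positive")
    # Position of the uncertainty's leading digit, e.g. 0.05 -> 2 decimal places.
    decimals = max(0, -math.floor(math.log10(uncertainty)))
    return f"{value:.{decimals}f} ± {uncertainty:.{decimals}f}"

print(format_with_uncertainty(4.0, 0.05))  # 4.00 ± 0.05
print(format_with_uncertainty(4.0, 0.5))   # 4.0 ± 0.5
print(format_with_uncertainty(4.0, 2))     # 4 ± 2
```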

From Arithmetic Operators to Algorithmic Knowledge: When “Calculating” Becomes Interpretation

The calculator’s role extends beyond simple operations. Modern models handle symbolic algebra, calculus approximations, and even machine-learning routines, turning basic arithmetic into complex algorithmic interpretation. For instance, solving \(x^2 - 5x + 6 = 0\) involves more than plugging in steps; it requires recognizing patterns (here, the factorization \((x - 2)(x - 3) = 0\), giving \(x = 2\) or \(x = 3\)), applying symbolic transformations, and validating solutions within domain constraints. A user entering “2x + 4 = 10” expects symbolic resolution, but many calculator solvers handle such equations numerically, often with Newton-Raphson or other iterative root-finding methods that converge toward an answer rather than derive it.
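The contrast between symbolic and numerical solving can be sketched with a textbook Newton-Raphson iteration, \(x_{n+1} = x_n - f(x_n)/f'(x_n)\). This is a minimal Python illustration under stated assumptions (a hand-supplied derivative, a fixed tolerance, and hand-picked starting points); the `newton_raphson` helper is hypothetical and is not the algorithm of any particular calculator. Which root of the quadratic it finds depends on where the iteration starts.

```python
from typing import Callable

def newton_raphson(f: Callable[[float], float], df: Callable[[float], float],
                   x0: float, tol: float = 1e-12, max_iter: int = 50) -> float:
    """Hypothetical helper: iterate x <- x - f(x)/f'(x) until the step is below tol."""
    x = x0
    for _ in range(max_iter):
        slope = df(x)
        if slope == 0:
            raise ZeroDivisionError("f'(x) is zero; the Newton step is undefined")
        step = f(x) / slope
        x -= step
        if abs(step) < tol:
            return x
    raise RuntimeError("no convergence within max_iter steps")

# 2x + 4 = 10, rewritten as f(x) = 2x - 6 = 0
def linear(x: float) -> float:
    return 2 * x - 6

def dlinear(x: float) -> float:
    return 2.0

# x^2 - 5x + 6 = 0 and its derivative 2x - 5
def quad(x: float) -> float:
    return x * x - 5 * x + 6

def dquad(x: float) -> float:
    return 2 * x - 5

linear_root = newton_raphson(linear, dlinear, x0=0.0)
root_from_0 = newton_raphson(quad, dquad, x0=0.0)  # approaches x = 2
root_from_5 = newton_raphson(quad, dquad, x0=5.0)  # approaches x = 3
print(linear_root, root_from_0, root_from_5)
```

Unlike the factorization, the iteration returns floating-point approximations and needs a derivative that stays away from zero (here, it would fail if started exactly at x = 2.5).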

As computer scientist Dr. Marcus Lin observes: “In many ways, the calculator no longer calculates; it interprets.” This shift means mathematical correctness now depends less on mechanical steps and more on the reliability of internal algorithms. Yet, like any interpretive system, these algorithms carry hidden assumptions.

Who defines the constants? What approximations optimize speed over accuracy? Without transparency, users remain vulnerable to systemic biases embedded in software layers invisible to the average calculator user.
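One common way software trades accuracy for speed is by truncating an infinite series. The Python sketch below approximates \(\sin x\) with a hypothetical `sin_taylor` helper that keeps only the first few Maclaurin terms; fewer terms mean less work but larger error. Real calculators may use other techniques, such as CORDIC or carefully fitted polynomials, so this illustrates the trade-off rather than any device's actual method.

```python
import math

def sin_taylor(x: float, terms: int) -> float:
    """Hypothetical helper: sum the first `terms` terms of x - x^3/3! + x^5/5! - ..."""
    total = 0.0
    term = x  # k = 0 term: x^1 / 1!
    for k in range(terms):
        total += term
        # Ratio of consecutive terms: -x^2 / ((2k+2)(2k+3))
        term *= -x * x / ((2 * k + 2) * (2 * k + 3))
    return total

errors = {}
for n in (2, 3, 5, 8):
    errors[n] = abs(sin_taylor(1.0, n) - math.sin(1.0))
    print(f"{n} terms: error {errors[n]:.2e}")
```

Each extra term costs a multiplication and an addition; where to stop is exactly the kind of hidden engineering choice the questions above point to.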

Trusting the device thus becomes an act of faith in engineering that users cannot inspect and only partially understand.

Numbers Beyond Decimal: The Cryptic World of Non-Base-10 and Fractals

Long before decimal systems dominated, ancient civilizations used base-12, base-20, and even base-60 systems, each revealing distinct mathematical properties. Today, calculators present results in base 10 (even though the computers inside most digital devices work in binary), but emerging fields explore alternatives such as duodecimal (base-12), long favored for trade because 12 divides evenly by 2, 3, 4, and 6, or fractal-based numeral systems that mirror nature’s self-similar patterns.
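Whether a fraction terminates depends on the base. The minimal Python sketch below uses exact `Fraction` arithmetic and a hypothetical `fractional_digits` helper that repeatedly multiplies by the base and peels off the integer part. It shows 1/3 repeating forever in base 10 but ending after a single digit in base 12 (0.4) and in base 60 (the digit 20), while 1/10, which terminates in decimal, repeats forever in binary. That last case is why 0.1 cannot be stored exactly in binary floating point.

```python
from fractions import Fraction

def fractional_digits(x: Fraction, base: int, max_digits: int = 12) -> tuple[list[int], bool]:
    """Hypothetical helper: digits after the point of 0 <= x < 1 in `base`.

    Returns (digits, terminated); terminated is False if the expansion
    was still going after max_digits digits.
    """
    if not 0 <= x < 1:
        raise ValueError("x must satisfy 0 <= x < 1")
    digits = []
    for _ in range(max_digits):
        if x == 0:
            return digits, True
        x *= base
        digit = x.numerator // x.denominator  # integer part is the next digit
        digits.append(digit)
        x -= digit
    return digits, x == 0

print(fractional_digits(Fraction(1, 3), 10, 6))  # ([3, 3, 3, 3, 3, 3], False)
print(fractional_digits(Fraction(1, 3), 12))     # ([4], True)
print(fractional_digits(Fraction(1, 3), 60))     # ([20], True)
print(fractional_digits(Fraction(1, 10), 2, 8))  # ([0, 0, 0, 1, 1, 0, 0, 1], False)
```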

In a breakthrough 2023 study by the Max Planck Institute, researchers demonstrated how “fractal number systems” could encode infinite precision in finite representations, challenging the decimal orthodoxy embedded in every calculator. “If math is not bound to ten, then our entire framework may limit creative problem-solving,” notes Dr. Amara Patel, lead author of the study.

Devices like quantum calculators, still in experimental phases, might one day leverage non-decimal bases to unlock faster, more nuanced computations—exposing how deeply our tools shape what we can know.

Reimagining Rigor: Why Our Calculators Teach Us to Question Math Itself

As society becomes increasingly reliant on computational tools, the hidden assumptions within calculators demand urgent scrutiny. Every decimal rounding, every algorithmic shortcut, and every design choice embeds a version of truth shaped by culture, engineering, and history. The numbers we take for granted are not neutral; they reflect human priorities and cognitive limits. Calculators are not merely convenient tools; they are gatekeepers of understanding, subtly guiding how we measure time, wealth, risk, and reality itself.

“We must shift from passive trust to critical engagement,” urges Dr. Elena Torres. “The next generation of calculators should not just compute; they should explain.” The future of accurate, transparent numerical reasoning depends on recognizing that numbers are not fixed but interpreted: within devices, frameworks, and the minds that use them. Only then can technology serve truth, not obscure it.
