The Science of Light: How Reality Bends in a World of Perception

Beneath the surface of everyday vision lies a complex interplay between physics, biology, and psychology—where light does not merely travel, but shapes how we interpret existence. The journey of photons from the sun to human retinas is not just a physical phenomenon; it is the foundation of perception itself. This article reveals the intricate mechanisms behind how light is detected, processed, and transformed by the brain—an intimate dance of optics and neurology that defines human experience.

From the quantum touch of individual photons to the cognitive filters that color our vision, every stage of this process holds profound implications for science, technology, and philosophy.

The Quantum Dance of Photons: How Light Enters the Body

At the moment light strikes a surface, a fundamental transformation occurs. Photons, the basic particles of light governed by quantum mechanics, begin their journey into the human eye. Each photon carries energy proportional to its frequency, and its path depends on surface properties such as reflectance, transparency, and texture. “When light enters the eye, it doesn’t simply bounce in; it interacts at the atomic level,” explains Dr. Elena Marquez, an optical physicist at Stanford University.
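The proportionality between photon energy and frequency is the Planck relation, E = h·f, with f = c/λ. The short sketch below works it through for three visible wavelengths. The function names and the sample wavelengths are illustrative choices, not taken from the article.

```python
# Physical constants (exact SI values since the 2019 redefinition).
PLANCK_H = 6.62607015e-34        # Planck constant, J*s
LIGHT_C = 299_792_458.0          # speed of light in vacuum, m/s
EV_IN_JOULES = 1.602176634e-19   # one electronvolt, J


def photon_energy_joules(wavelength_nm: float) -> float:
    """Energy of a single photon: E = h * f, where f = c / wavelength."""
    if wavelength_nm <= 0:
        raise ValueError("wavelength must be positive")
    frequency_hz = LIGHT_C / (wavelength_nm * 1e-9)
    return PLANCK_H * frequency_hz


def photon_energy_ev(wavelength_nm: float) -> float:
    """Same energy expressed in electronvolts."""
    return photon_energy_joules(wavelength_nm) / EV_IN_JOULES


if __name__ == "__main__":
    # Illustrative wavelengths near the ends and middle of the visible range.
    for label, nm in [("violet", 400.0), ("green", 550.0), ("red", 700.0)]:
        print(f"{label:>6} {nm:5.0f} nm -> {photon_energy_ev(nm):.2f} eV")
```

Shorter wavelengths mean higher frequencies, so a violet photon carries roughly 3.1 eV against about 1.8 eV for a red one.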

“The cornea and lens act not just as magnifying lenses but as selective filters, determining which wavelengths pass through to stimulate photoreceptors.” Melanin in the retinal pigment epithelium absorbs stray light, minimizing internal scattering and preserving visual clarity. Meanwhile, spontaneous emission and fluorescence in retinal cells can subtly alter signal characteristics, though these effects are usually imperceptible in normal vision. The process begins with phototransduction: photons are absorbed by opsin proteins (rhodopsin in rods, photopsins in cones), triggering a biochemical cascade that converts light into electrical signals.

Quantum efficiency, the ratio of photons detected to those incident, is remarkably high in human photoreceptors, particularly in the rods that handle dim-light vision. “We see at photon levels as low as a single photon under ideal conditions,” Marquez notes. “This sensitivity is not just a biological marvel; it’s a key evolutionarily tuned mechanism for detecting subtle changes in dim light.”

Photon absorption sets off a molecular cascade involving G-proteins, phosphodiesterase, and ion channels, which changes the photoreceptor's membrane potential. Downstream, retinal ganglion cells convert that graded signal into action potentials.

Yet, the true transformation of light into vision unfolds not at the retina, but in the brain’s visual cortex.

The Brain’s Visual Algorithm: From Retina to Recognized Imagery

The retina compresses and filters raw light into neural signals, but the brain completes the translation. Signal processing begins in the retina itself, where ganglion cells organize raw data along features such as edges, motion, and contrast. “The retina doesn’t send a full ‘image’ out; it sends localized patterns that the brain interprets using prior knowledge and context,” elaborates Dr. Rajiv Patel, a neuroscience researcher at MIT. This distributed processing lays the groundwork for perception beyond mere input. Carried by the optic nerve, which is formed from the ganglion cells' axons, visual signals travel through the thalamus to the primary visual cortex (V1), located in the occipital lobe.

Here, neurons selectively respond to oriented edges, spatial frequencies, and binocular disparity—enabling depth perception and shape recognition. Subsequent cortical areas integrate these features, allowing seamless recognition of faces, objects, and scenes. “The brain doesn’t reconstruct reality from scratch—it reconstructs it from fragments,” Patel asserts.

“Every perception is an inference, a best guess based on neural construal.” This hierarchical processing explains why identical visual stimuli can be interpreted differently: illusions demonstrate how expectations, memory, and context influence what we ‘see.’ Depending on the neural context, the brain favors coherence and stability over strict fidelity to the object.

Key neural mechanisms include lateral inhibition, which sharpens contrast at edges, and predictive coding, where higher cortical regions send feedback to refine incoming signals—explaining phenomena like perceptual adaptation and change blindness.
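Lateral inhibition can be illustrated with a toy one-dimensional model: each unit's output is its own input minus a fraction of its two neighbours' inputs. This is a minimal sketch, not a model of real retinal circuitry. The function name, the inhibition weight of 0.25, and the step-edge input are all assumptions chosen for illustration.

```python
def lateral_inhibition(signal, inhibition=0.25):
    """Return each unit's input minus `inhibition` times the sum of its
    two neighbours' inputs. End units reuse their own value as the
    missing neighbour so the output has the same length as the input."""
    n = len(signal)
    out = []
    for i in range(n):
        left = signal[max(i - 1, 0)]
        right = signal[min(i + 1, n - 1)]
        out.append(signal[i] - inhibition * (left + right))
    return out


if __name__ == "__main__":
    # A luminance step: five dim units followed by five bright units.
    step = [1.0] * 5 + [3.0] * 5
    response = lateral_inhibition(step)
    print(response)
    # -> [0.5, 0.5, 0.5, 0.5, 0.0, 2.0, 1.5, 1.5, 1.5, 1.5]
```

Away from the edge the response is flat, but the last dim unit dips below its neighbours and the first bright unit rises above its neighbours. That exaggerated difference at the boundary is the contrast sharpening the text describes.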

Color and Cognition: How the Brain Deciphers the Spectrum

Human color vision hinges on three types of photoreceptors, the S, M, and L cones, sensitive to short, medium, and long wavelengths respectively, which correspond roughly to blue, green, and red. The brain combines signals from these cone types to produce a full spectrum of color perception. But color is more than biology; it is interpretation. “Color constancy allows us to perceive consistent hues under varying lighting,” says Dr. Marquez.

“The brain corrects for changes in illumination, keeping a tomato red whether it’s bathed in sunlight or fluorescent light.” Yet, color experience is not purely objective. Cultural and linguistic factors subtly shape how colors are categorized and remembered. Some languages draw distinctions between shades that others group under a single term, while artists exploit neural limitations to create vivid illusions.
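Imaging software uses a loosely analogous correction for illumination. The sketch below uses the gray-world heuristic, which assumes a scene averages out to neutral gray and rescales each channel accordingly. It is an engineering analogy only, not a claim about how the brain achieves color constancy. The function name, the four-pixel scene, and the warm illuminant values are hypothetical. The scene is deliberately built to average gray so that the heuristic's assumption holds.

```python
def gray_world_correct(pixels):
    """Rescale each RGB channel so all three channel means become equal.

    `pixels` is a list of (r, g, b) tuples. The gray-world assumption is
    that the true scene averages to neutral gray, so any imbalance in the
    channel means is attributed to the illuminant and divided out."""
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    overall = sum(means) / 3
    gains = [overall / m if m > 0 else 1.0 for m in means]
    return [tuple(p[c] * gains[c] for c in range(3)) for p in pixels]


if __name__ == "__main__":
    # Hypothetical scene under neutral light: tomato, leaf, sky, gray card.
    scene = [(0.8, 0.1, 0.1), (0.1, 0.8, 0.1), (0.1, 0.1, 0.8), (0.5, 0.5, 0.5)]
    # Hypothetical warm illuminant: boosts red, attenuates blue.
    warm = (1.4, 1.0, 0.6)
    observed = [tuple(p[c] * warm[c] for c in range(3)) for p in scene]
    corrected = gray_world_correct(observed)
    for before, after in zip(observed, corrected):
        print([round(v, 3) for v in before], "->", [round(v, 3) for v in after])
```

Because the scene satisfies the gray-world assumption, the corrected values match the original surface colors up to rounding. On scenes dominated by a single hue the heuristic fails, which is one reason it serves only as an analogy here.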

“Color is a constructed reality,” Patel notes. “It’s perception wrapped in biology, shaped by culture and cognition.” Contextual cues, such as surrounding colors or luminance, further modulate perception. The well-known demonstration of identical gray patches on a yellow versus a blue background shows how local contrast alters apparent brightness, a testament to the brain’s interpretive role.

Moreover, synesthesia reveals the brain’s remarkable flexibility: some individuals associate colors with numbers or sounds, blending sensory pathways in ways that challenge traditional models of isolated perception.

The Role of Attention and Expectation in Visual Processing

Attention acts as a neural spotlight, amplifying relevant signals while suppressing distractions. “Bottom-up stimuli, such as bright colors and sudden movement, automatically grab attention,” explains Patel. “But top-down processes, including memory, expectation, and prior experience, guide where and how we interpret ambiguous input.” This dual control explains why we often see what we expect, not necessarily what is objectively there. Experiments with visual search tasks show that participants detect targets faster when the targets align with prior knowledge or context. “The brain doesn’t passively register; it actively parses, predicts, and filters,” Patel explains.

This is why misleading cues persist, as in the Müller-Lyer illusion, where arrowheads distort length perception: context shapes how raw data is processed. Even microscopic variations in neural connectivity influence vision. Studies using fMRI reveal distinct activation patterns when viewing familiar vs. unfamiliar images, confirming that personal experience sculpts perception. “Every visual experience leaves a neural imprint,” Marquez observes. “Our vision is not a window on the world, but a filter shaped by history.”

Beyond Biology: Light in Technology, Art, and Everyday Life

Understanding light’s journey from source to perception drives innovation across disciplines. In display technology, LCDs, OLEDs, and micro-LEDs mimic biological sensitivity, optimizing contrast and

Related Post

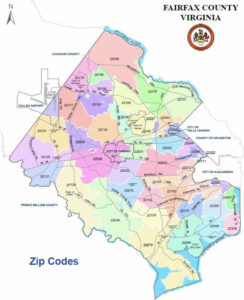

The Fairfax VA Zip Code: A Comprehensive Insight into One of Northern Virginia’s Most Dynamic Communities

The Unseen Advocates: How Prisoners’ Rights Lawyers Fight for Justice Behind Bars

Yemen Conflict 2025: What to Expect as War Enters a Critical Third Year

Meet Michael Poulsen's Wife: Jeanet Poulsen Revealed