Mastering Pip for Sequential Data Processing: The Key to Unlocking Sequential Insights

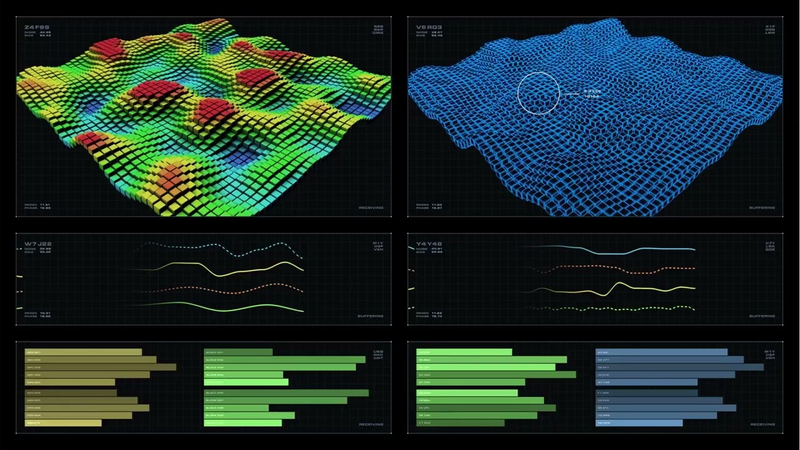

In an era where data flows in continuous streams—whether from sensors, financial markets, healthcare devices, or user behavior—processing sequential data efficiently is no longer optional. Enter Pip, a high-performance, open-source data pipeline framework engineered specifically for handling time-ordered, time-sensitive information. Unlike generic data processing tools, Pip excels at managing the unique challenges of sequential data, offering developers and analysts a powerful, scalable way to ingest, transform, and analyze data in motion.

Mastering Pip for sequential data processing means tapping into a system designed not just for volume, but for precision, timing, and integrity across data pipelines.

At its core, Pip distinguishes itself by treating time as a first-class citizen in data workflows. Sequential data—point sequences, time-series, event streams—relies on temporal order for meaning and accuracy, and Pip preserves this critical dimension throughout processing.

Each data record carries a timestamp or sequence number, enabling Pip to enforce chronological constraints, detect anomalies in real time, and maintain temporal coherence across distributed systems. This capability is indispensable in domains where delay or reordering distorts outcomes: stock trading, real-time monitoring, IoT telemetry, and predictive maintenance.
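To make the sequence-number idea concrete, here is a minimal Python sketch of the kind of integrity check described above. The article does not document Pip's API, so `Record` and `check_sequence` are hypothetical names, and the check assumes each source assigns consecutive integer sequence numbers.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple

# Illustrative sketch, not Pip's API.
@dataclass(frozen=True)
class Record:
    seq: int          # sequence number assigned by the source (assumed consecutive)
    timestamp: float  # event time, seconds since the Unix epoch
    value: float

def check_sequence(records: Iterable[Record]) -> List[Tuple[str, int]]:
    """Return (issue, seq) pairs for gaps and out-of-order records."""
    issues: List[Tuple[str, int]] = []
    last_seq = None
    for rec in records:
        if last_seq is not None:
            if rec.seq <= last_seq:
                # Sequence number did not advance: duplicate or reordered record.
                issues.append(("out_of_order", rec.seq))
                continue
            if rec.seq > last_seq + 1:
                # One or more sequence numbers were skipped: possible data loss.
                issues.append(("gap", rec.seq))
        last_seq = rec.seq
    return issues

stream = [Record(1, 0.0, 1.0), Record(2, 1.0, 1.1), Record(4, 3.0, 0.9), Record(3, 2.0, 1.2)]
print(check_sequence(stream))  # [('gap', 4), ('out_of_order', 3)]
```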

One of Pip’s most compelling strengths lies in its unified architecture. Unlike fragmented solutions requiring multiple specialized tools, Pip integrates data ingestion, stream transformation, batch processing, and real-time analytics into a single coherent pipeline.

This integration reduces architectural complexity and the latency introduced by handing data between separate tools, shortening the path from data acquisition to actionable insight. For example, in a smart manufacturing environment, Pip can simultaneously ingest sensor readings from hundreds of machines, normalize timestamps, apply filtering or windowing operations, and trigger alerts for equipment irregularities, all within a single, scalable pipeline.
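The manufacturing example can be sketched as a chain of Python generators, one per stage. This is a framework-agnostic illustration rather than Pip's API; the stage functions, the plausible-range filter, and the "three consecutive readings above a limit" alert rule are all hypothetical choices.

```python
from typing import Dict, Iterable, Iterator, Tuple

# Illustrative sketch, not Pip's API.
Reading = Tuple[str, float, float]  # (machine_id, timestamp_seconds, temperature_c)

def drop_invalid(readings: Iterable[Reading]) -> Iterator[Reading]:
    """Filtering stage: discard readings outside a (hypothetical) plausible sensor range."""
    for machine, ts, temp in readings:
        if -40.0 <= temp <= 200.0:
            yield machine, ts, temp

def alert_on_sustained_high(
    readings: Iterable[Reading], limit: float, count: int
) -> Iterator[Tuple[str, float]]:
    """Alerting stage: emit (machine_id, timestamp) once a machine reports
    `count` consecutive readings above `limit`."""
    streak: Dict[str, int] = {}
    for machine, ts, temp in readings:
        streak[machine] = streak.get(machine, 0) + 1 if temp > limit else 0
        if streak[machine] == count:
            yield machine, ts

raw = [
    ("m1", 0.0, 90.0), ("m2", 0.0, 70.0),
    ("m1", 1.0, 95.0), ("m2", 1.0, 999.0),  # m2's reading is a sensor glitch
    ("m1", 2.0, 97.0), ("m2", 2.0, 71.0),
]
alerts = list(alert_on_sustained_high(drop_invalid(raw), limit=85.0, count=3))
print(alerts)  # [('m1', 2.0)]
```

Because each stage is a generator, readings flow through one at a time, so the same chain works on an unbounded stream as well as on a list.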

Mastering Pip: Key Architecture and Core Capabilities

Pip’s design centers on simplicity without sacrificing power. At deployment, data sources such as APIs, message brokers like Kafka, other message queues, or file streams feed into Pip’s ingestion layer, which supports robust buffer management and error handling.
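Buffer management at ingestion usually comes down to a bounded buffer plus a backpressure signal. The sketch below uses Python's standard `queue.Queue` to show that idea; it assumes a simple accept/reject policy and a hypothetical `try_ingest` helper, and is not how Pip itself is documented to work.

```python
import queue

def try_ingest(buffer: queue.Queue, event: dict) -> bool:
    """Non-blocking enqueue. Returning False is the backpressure signal:
    the (hypothetical) source should pause or retry instead of overrunning memory."""
    try:
        buffer.put_nowait(event)
        return True
    except queue.Full:
        return False

# Illustrative sketch, not Pip's API: a bounded buffer holding at most 3 pending events.
buffer = queue.Queue(maxsize=3)
accepted = [try_ingest(buffer, {"seq": i}) for i in range(5)]
print(accepted)  # [True, True, True, False, False]

buffer.get_nowait()                    # a consumer drains one event...
print(try_ingest(buffer, {"seq": 5}))  # ...so the next enqueue succeeds: True
```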

Each event includes metadata, with timestamps meticulously tracked to preserve sequence integrity.
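A minimal sketch of an event envelope that carries metadata alongside a timestamp normalized to UTC at ingestion. The field names and the policy of rejecting timestamps without a time-zone offset are illustrative assumptions, not Pip's schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict

# Illustrative sketch, not Pip's API.
@dataclass(frozen=True)
class Event:
    source: str             # hypothetical metadata: which device or feed produced the event
    seq: int                # per-source sequence number
    event_time: datetime    # when the event happened, normalized to UTC
    payload: Dict[str, Any] = field(default_factory=dict)

def make_event(source: str, seq: int, raw_time: str, payload: Dict[str, Any]) -> Event:
    """Parse an ISO-8601 timestamp and normalize it to UTC at ingestion.
    Naive timestamps (no offset) are rejected rather than guessed."""
    ts = datetime.fromisoformat(raw_time)
    if ts.tzinfo is None:
        raise ValueError(f"timestamp without time zone from {source}: {raw_time!r}")
    return Event(source, seq, ts.astimezone(timezone.utc), payload)

e = make_event("sensor-7", 42, "2024-03-10T09:30:00+02:00", {"temp_c": 21.5})
print(e.event_time.isoformat())  # 2024-03-10T07:30:00+00:00
```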

The processing engine distinguishes Pip through several advanced features:

- Ordered Stream Processing: Pip processes data in chronological order, which is essential for time-dependent logic, even when records arrive out of order. Rather than batching at fixed intervals, it buffers late-arriving data and reorders it, preserving temporal accuracy under network jitter or source delays.

- Time-Series Primitives: Pip includes built-in operators for time-based aggregation and windowing, such as moving averages, trend detection, and event-rate calculations, all optimized for continuous time-series data.

- Fault Tolerance: Through distributed checkpointing and exactly-once processing semantics, Pip ensures no data loss or duplication, even during system failures. This resilience is vital for mission-critical applications where data correctness cannot be compromised.

- Scalable Concurrency: Pip dynamically allocates compute resources based on workflow demands, supporting thousands of concurrent streams without manual scaling. This elasticity makes it ideal for high-throughput environments like financial data feeds or urban traffic systems.
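Ordered processing with support for late data is commonly implemented with a reorder buffer and a watermark. The following Python sketch shows that general technique under stated assumptions: events carry an event time in seconds, `allowed_lateness` is a hypothetical tuning knob, and events that fall behind the watermark are simply dropped. It is not Pip's implementation.

```python
import heapq
from typing import Iterable, Iterator, List, Tuple

# Illustrative sketch, not Pip's API.
TimedEvent = Tuple[float, str]  # (event_time_seconds, payload)

def reorder(events: Iterable[TimedEvent], allowed_lateness: float) -> Iterator[TimedEvent]:
    """Emit events in event-time order, holding each one until the watermark
    (max event time seen minus allowed_lateness) has passed it.
    Events older than the current watermark are dropped as too late."""
    heap: List[TimedEvent] = []
    max_seen = float("-inf")
    for ev in events:
        ts = ev[0]
        if ts < max_seen - allowed_lateness:
            continue  # too late; a real system might route it to a side output instead
        heapq.heappush(heap, ev)
        max_seen = max(max_seen, ts)
        watermark = max_seen - allowed_lateness
        # Release everything the watermark guarantees is complete.
        while heap and heap[0][0] <= watermark:
            yield heapq.heappop(heap)
    # End of stream: flush whatever is still buffered, in order.
    while heap:
        yield heapq.heappop(heap)

arrivals = [(1.0, "a"), (3.0, "c"), (2.0, "b"), (6.0, "f"), (4.0, "d"), (0.0, "z")]
print(list(reorder(arrivals, allowed_lateness=2.0)))
# [(1.0, 'a'), (2.0, 'b'), (3.0, 'c'), (4.0, 'd'), (6.0, 'f')]
```

A larger `allowed_lateness` tolerates more disorder (here it would keep the very late `"z"` event) at the cost of holding events longer before release, which is the trade-off between latency and completeness.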

Real-World Applications: Where Pip Transforms Data Into Action

Across industries, Pip is reshaping how sequential data is processed. Let’s explore key application areas:

Financial Market Analysis: High-frequency trading platforms use Pip to process millisecond-level tick data, detect anomalies, and execute trades on those real-time signals. Because timestamps stay precisely aligned, the same data supports accurate backtesting and time-delayed execution logic, both critical for compliance and performance.

IoT and Industrial IoT: In remote sensor networks, Pip handles time-ordered telemetry from edge devices, enabling real-time monitoring of environmental conditions, asset health, or production line status. Its low-latency transformations allow immediate alerts or adaptive system control, improving operational efficiency and reducing downtime.

Healthcare Monitoring: Wearable and implantable devices generate continuous physiological data streams—ECG, blood glucose, or motion. Pip processes these sequences with temporal precision to detect irregularities like arrhythmias or falls, enabling proactive medical interventions rooted in verified temporal patterns.

User Behavior Analytics: In digital platforms, Pip tracks clickstreams, interactions, and session events in real time, allowing marketers and product teams to identify behavioral patterns, optimize UX flows, and personalize experiences based on actual timing and sequence of user actions.
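Timing-based clickstream analysis often starts by splitting events into sessions separated by periods of inactivity. Here is a small Python sketch of that session-window technique, assuming a single user's clicks with timestamps in seconds and a hypothetical 30-minute (1800-second) inactivity gap; `sessionize` is an illustrative name, not a Pip operator.

```python
from typing import List, Tuple

# Illustrative sketch, not Pip's API.
Click = Tuple[float, str]  # (timestamp_seconds, page)

def sessionize(clicks: List[Click], gap: float) -> List[List[Click]]:
    """Split one user's clicks into sessions: a new session starts
    whenever the pause since the previous click exceeds `gap`."""
    sessions: List[List[Click]] = []
    for click in sorted(clicks):  # sort so the gap is measured in event-time order
        if sessions and click[0] - sessions[-1][-1][0] <= gap:
            sessions[-1].append(click)
        else:
            sessions.append([click])
    return sessions

clicks = [(0, "home"), (30, "search"), (95, "product"), (2000, "home"), (2050, "cart")]
for s in sessionize(clicks, gap=1800):
    print([page for _, page in s])
# ['home', 'search', 'product']
# ['home', 'cart']
```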

Best Practices for Mastering Pip in Sequential Processing

Successfully implementing Pip requires more than technical setup; it demands strategic pipeline design. The following practices help:

- Define Clear Time Semantics: Establish consistent timestamping protocols and time zones at ingestion, ensuring uniformity across distributed sources.

- Optimize Windowing Strategically: Choose time windows (sliding, tumbling, or session-based) based on analysis needs, balancing granularity against computational load.

- Leverage Buffering Intelligently: While Pip manages buffering automatically, tuning buffer sizes and backpressure settings keeps latency low and prevents buffer overflow or dropped records during load spikes.

- Implement Holistic Monitoring: Equip pipelines with end-to-end observability—tracking latency, throughput, and sequence integrity—to detect and resolve bottlenecks before they impact downstream decisions.

- Ensure Seamless Integration: Pip’s API-first design lets pipelines integrate directly with databases, dashboards, machine learning frameworks, and alerting systems, enabling end-to-end workflows from ingestion to action.
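The windowing choices in the list above can be illustrated with two small helpers: a tumbling-window mean (fixed, non-overlapping buckets) and a sliding-window moving average over the trailing `width` seconds. These are framework-agnostic Python sketches with hypothetical names, not Pip's built-in operators; `sliding_mean` assumes its input is already in timestamp order.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, List, Tuple

# Illustrative sketch, not Pip's API.
Point = Tuple[float, float]  # (timestamp_seconds, value)

def tumbling_mean(points: Iterable[Point], size: float) -> Dict[float, float]:
    """Mean per non-overlapping window [k*size, (k+1)*size), keyed by window start."""
    sums: Dict[float, float] = defaultdict(float)
    counts: Dict[float, int] = defaultdict(int)
    for ts, value in points:
        start = (ts // size) * size
        sums[start] += value
        counts[start] += 1
    return {start: sums[start] / counts[start] for start in sorted(sums)}

def sliding_mean(points: Iterable[Point], width: float) -> List[Tuple[float, float]]:
    """Moving average over the window (ts - width, ts], emitted at every point."""
    window: Deque[Point] = deque()
    total = 0.0
    out: List[Tuple[float, float]] = []
    for ts, value in points:
        window.append((ts, value))
        total += value
        # Evict points that have fallen out of the trailing window.
        while window[0][0] <= ts - width:
            total -= window.popleft()[1]
        out.append((ts, total / len(window)))
    return out

pts = [(0, 1.0), (1, 3.0), (2, 5.0), (3, 7.0), (4, 9.0)]
print(tumbling_mean(pts, size=2))  # {0: 2.0, 2: 6.0, 4: 9.0}
print(sliding_mean(pts, width=2))  # [(0, 1.0), (1, 2.0), (2, 4.0), (3, 6.0), (4, 8.0)]
```

Tumbling windows emit one result per bucket and are cheap to compute; sliding windows emit a result at every point and react faster to change, at a higher computational cost.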

What sets Pip apart in the crowded landscape of data processing tools is its unwavering focus on temporal fidelity.

While many frameworks treat time as a secondary attribute or compress it into coarse intervals, Pip elevates timing to the foundation of data semantics, ensuring that sequences are not just processed but understood in their rightful order and rhythm. This precision unlocks deeper insights, stronger compliance, and more responsive systems, especially where latency, ordering, and accuracy converge.

As organizations increasingly depend on real-time decision-making driven by time-sensitive data, mastering Pip becomes not just a technical upgrade but a strategic imperative. Whether deployed on edge devices or in cloud architectures, Pip equips data professionals with the tools to transform chaotic streams into coherent, trustworthy sequences, turning raw data into actionable intelligence with speed and reliability.

In essence, Pip doesn’t just process data—it preserves time.

And in the race for real-world impact, that preservation is everything. Mastering Pip for sequential data processing is mastering the very pace of modern intelligence.